Weight Agnostic Neural Networks

Luo Yi

Dec. 12, 2019

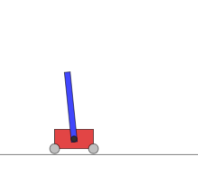

Pros & Cons of WANNs

Experiments with Reinforcement Learning Tasks

- Swing Up

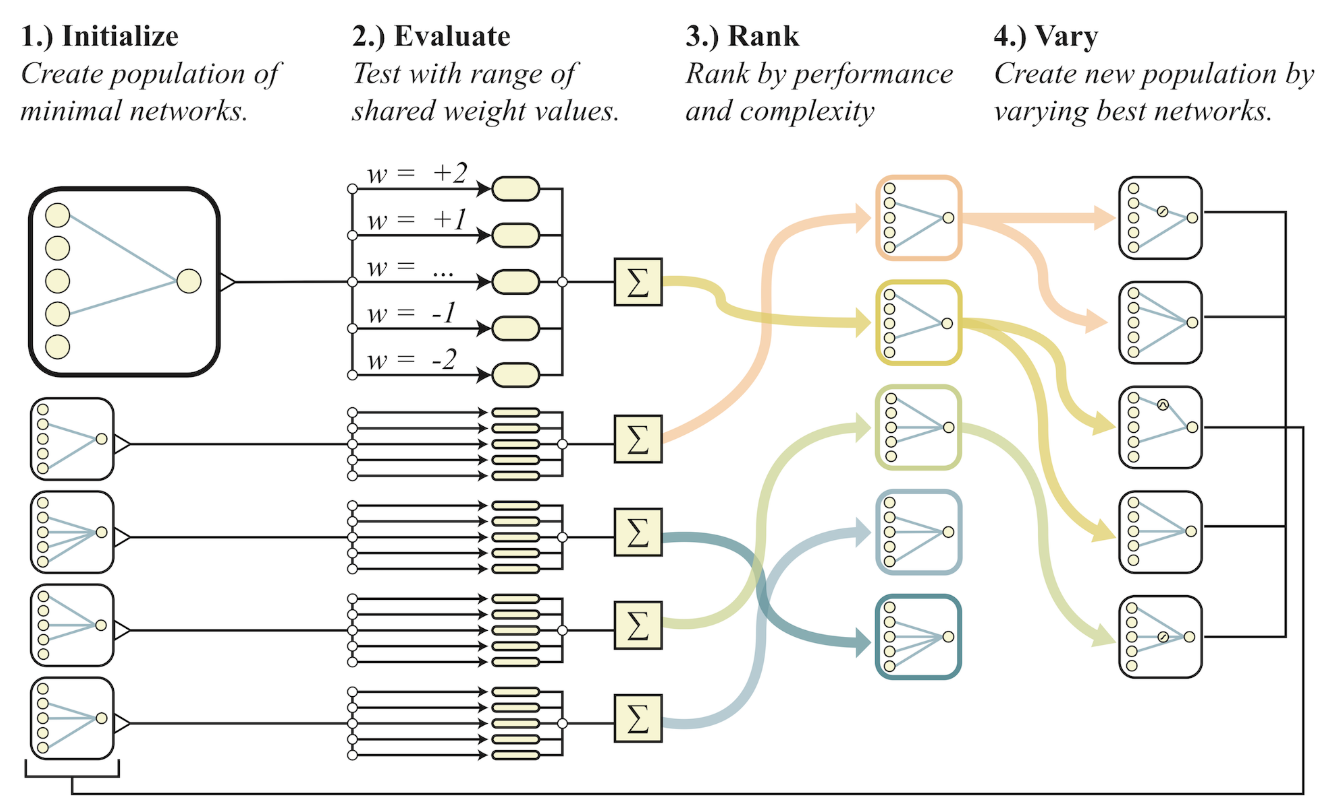

- Biped

- CarRacing

Performance Compared with Fixed Topology

| Weights (Swing Up score) | WANN | Fixed Topology |
|---|---|---|
| Random | 57 | 21 |
| Random Shared | 515 | 7 |
| Tuned Shared | 723 | 8 |
| Tuned | 932 | 918 |

| Weights (Biped score) | WANN | Fixed Topology |
|---|---|---|
| Random | -46 | -129 |
| Random Shared | 51 | -107 |
| Tuned Shared | 261 | -35 |
| Tuned | 332 | 347 |

| Weights (CarRacing score) | WANN | Fixed Topology |
|---|---|---|
| Random | -69 | -82 |
| Random Shared | 375 | -85 |
| Tuned Shared | 608 | -37 |
| Tuned | 893 | 906 |
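
One rough way to read the tables above is to ask how much of the fully tuned score each architecture keeps when it only gets a random shared weight. The sketch below copies the "Random Shared" and "Tuned" rows from the slides and divides one by the other. The `retained_fraction` helper and the ratio itself are illustrative assumptions, not a metric from the slides, and the ratio is only a loose indicator when scores are negative.

```python
# Scores copied from the slide tables: (WANN, fixed topology) per weight setting.
SCORES = {
    "Swing Up": {"Random Shared": (515, 7), "Tuned": (932, 918)},
    "Biped": {"Random Shared": (51, -107), "Tuned": (332, 347)},
    "CarRacing": {"Random Shared": (375, -85), "Tuned": (893, 906)},
}

WANN, FIXED = 0, 1  # column indices inside each tuple


def retained_fraction(task, column):
    """Random-shared score divided by tuned score (hypothetical helper)."""
    shared = SCORES[task]["Random Shared"][column]
    tuned = SCORES[task]["Tuned"][column]
    return shared / tuned


for task in SCORES:
    wann = retained_fraction(task, WANN)
    fixed = retained_fraction(task, FIXED)
    print(f"{task}: WANN keeps {wann:.2f}, fixed topology keeps {fixed:.2f}")
```

With tuned weights the two architectures score about the same, so the gap shows up only in the untrained and shared-weight rows.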

Why?

WANNs are built to behave this way.

- Without any weight training, a WANN can already perform the task.

- With a little training, it is near optimal.
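
To make "without training" concrete, here is a minimal sketch of evaluating one fixed topology with a single shared weight, averaged over a sweep of weight values. Everything in it is an assumption for illustration: the tiny 3-2-1 connectivity masks, the `tanh` activations, the sweep values, and the toy scoring function are hypothetical and stand in for a real reinforcement learning rollout.

```python
import numpy as np

# Hypothetical topology: 3 inputs -> 2 hidden -> 1 output.
# A 1 marks an existing connection; no per-connection weight is stored.
MASK_IN_HID = np.array([[1, 0],
                        [1, 1],
                        [0, 1]], dtype=float)
MASK_HID_OUT = np.array([[1], [1]], dtype=float)

# Assumed sweep of shared weight values (illustrative choice).
SHARED_WEIGHTS = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]


def forward(x, shared_w):
    """Run the network with every existing connection set to shared_w."""
    hidden = np.tanh(x @ (MASK_IN_HID * shared_w))
    return np.tanh(hidden @ (MASK_HID_OUT * shared_w))


def mean_score_over_shared_weights(score_fn):
    """Average a score over the sweep; no weight is ever trained."""
    scores = [score_fn(lambda x, w=w: forward(x, w)) for w in SHARED_WEIGHTS]
    return float(np.mean(scores))


def toy_score(policy):
    """Toy stand-in for an episode return: negative mean squared error."""
    x = np.array([[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]])
    target = np.array([[0.0], [0.5]])
    return -float(np.mean((policy(x) - target) ** 2))


print(mean_score_over_shared_weights(toy_score))
```

An architecture search would compare candidate masks by this averaged score, so the structure rather than the weight values carries the solution.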

Parameters and HyperParameters

But the comparison is unfair.

WANNs' architectures are shrunk to be so.

Can WANN beat NAS? I wonder.
